How Nation State Cyberespionage and Cyber Counterintelligence Campaigns May Be Normalizing Persuasive Technology Behavioral Design to Victimize US Military Communities

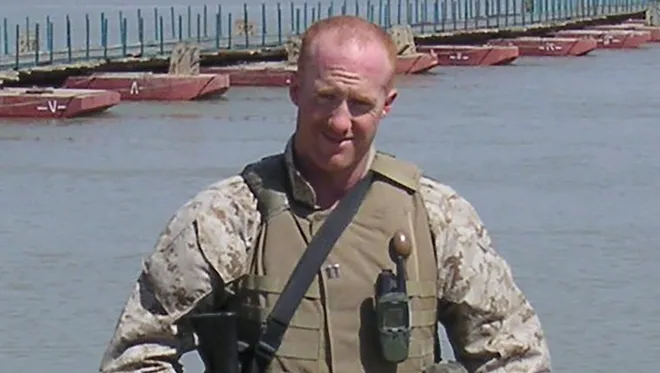

By Tim Pappa

Tim Pappa is a former Supervisory Special Agent and profiler with the Federal Bureau of Investigation’s Behavioral Analysis Unit. Tim specializes in cyber deception and online influence targeting cybercriminals and nation state groups.

Iranian cyber campaigns targeting US military personnel are relatively familiar, but those historical approaches arguably relied mainly on attractive female sock puppets to lure US military personnel into clicking malicious links or links to manipulated communication platforms.

The recent disruptions of suspected Iranian cyberespionage and cyber counterintelligence campaigns have highlighted the “sophisticated” technical approach of creating and maintaining consistent domains and sock puppets over a period of years to lure victims.

These campaigns are comparatively impressive, but there is more to say about how they appeared to exploit the behaviors of their victims.

The cyber victimology of the two campaigns differed somewhat. Tortoiseshell, also tracked as Imperial Kitten, posed as an aerobics instructor and tried to engage Western aerospace defense industry employees and current and former US military personnel around a health and diet program during the pandemic. APT42 attempted to lure Iranian job seekers interested in working in or for Israel, in a commercial or even intelligence capacity, during the recent conflict between the two countries. However, both campaigns demonstrated how persuasive technologies can drive our choices, so that we fail to scrutinize online content and storylines that we should question.

Persuasive technology can be any software or hardware we interact with online that influences our behavior. Examples range from fitness applications like Fitbit to games like Candy Crush to language learning applications like Duolingo.

When I was a Supervisory Special Agent and profiler with the FBI’s Behavioral Analysis Unit (BAU), I routinely provided operational recommendations to cyber investigators. These behaviorally based recommendations concentrated on the victimology of the offenders being targeted. Who they were victimizing, and how, offered some insight into how vulnerable they themselves might be to an online approach or some other kind of communication. That analysis often applied frameworks such as persuasive technology to view their demonstrated online behavior from another perspective.

This recent Iranian cyberespionage and cyber counterintelligence activity may offer a behavioral model of future Iranian approaches to targeting US military communities.

This article briefly describes these contemporary suspected Iranian nation state campaigns and then characterizes them within the framework of persuasive technology research. It also explores how that research and its application have continued to shift toward coercive or deceptive uses of persuasive technology, and why this will likely remain the most normalized design approach for nation state cyberespionage and counterintelligence.

Characterizing these campaigns as a concentrated behavioral targeting environment

In a July 2021 report, Proofpoint detailed how suspected Iranian actors Tortoiseshell or Imperial Kitten operated a social media sock puppet posing as an aerobics instructor and fitness influencer. They used it to build a relationship with an employee of a small subsidiary of an aerospace defense contractor.

That exchange, sustained over several years, eventually led to an email message containing a malicious OneDrive URL. The link was presented as a diet survey for the victim to fill out.

Screenshot of the diet survey in the malicious OneDrive URL from the Proofpoint report.

The email message below, from the sock puppet to the victim, apparently reflects the ongoing interaction between the “flirty” sock puppet and the victim.

The language in this email may also match what one would expect from a fitness professional whose first language, based on her social media profile, was not English.

Screenshot of the email message containing the OneDrive URL from the Proofpoint report.

The focus on diet and health, especially during the COVID-19 pandemic, was not only timely. It also responded to what many people were thinking about at the time, and it was a topic most users would not scrutinize as potentially malicious. People may also have grown more accustomed to sharing content and communicating by email during the pandemic because most were at home. A OneDrive link from a familiar contact would therefore appear safer than most links people might be wary of.

Facebook (now Meta) took down approximately 200 additional sock puppet accounts at the time. These accounts appeared to target military and civilian personnel in the same or related industries with a similar approach, including cloned or fake employment portals for government jobs. The sock puppets posed as job recruiters. That may again have seemed timely, as many people during the pandemic were looking to change employers in response to individual and family health needs.

The more recent campaign, attributed by Mandiant to APT42, also posed as job recruiters. It attempted to lure anyone in Iran who might be willing to bring their cyber expertise to Israel.

The content was written in Farsi and appeared primarily on Iranian social media and Iranian domains. It asked users to provide contact information and background details as potential recruits for the kinds of employment advertised on these platforms.

Screenshot of one of the Farsi-language job recruitment websites advertising employment in Israel.

This campaign is believed to have been active for roughly seven years or more. Continuing to run it during the heightened conflict between Iran and Israel over the past year arguably concentrates collection on people who may truly be willing to cooperate with Israel during this period, despite the attention the conflict draws. During periods of heightened conflict, fewer people are arguably willing to reach out to firms or individuals presumably in Israel and risk sharing their expertise despite the potential costs. Those who do respond may therefore form a narrower and more telling pool.

The campaign may ultimately have functioned better as an educational deterrent. Some people in Iran shared the content while suggesting that Israeli intelligence may have been behind it.

Persuasive technology research and application shifting to deceptive uses?

Social scientist B.J. Fogg first named persuasive technology, which he defined as any interactive computing system designed to change or maintain people’s attitudes or behaviors.

Fogg coined the term “captology” to describe what was, in the early 2000s, a limited space where technology and persuasion overlapped in people’s lives: anywhere there was human-computer interaction. Fogg emphasized that captology focuses on the planned persuasive effects of a technology, not on its ‘side effects’.

Fogg recognized that the social environment, online and offline, also shaped how responsive a user with complex behaviors might be to suggestions built into persuasive technology. He wanted to know whether a person’s motivation to perform a behavior, or their ability to perform it, made more of a difference than the persuasive technology itself. Ultimately, threat actors like these Iranian cyber groups are counting on victimizing the handful of US military personnel who may be more motivated than their peers to use a persuasive technology for a particular purpose. That purpose might be staying in touch with family back home or finding love on a dating platform.

Beyond those psychological underpinnings, Fogg was intent on ensuring that persuasive technologies, mostly health-related ones, were not used in coercive or deceptive ways.

However, the development and application of persuasive technologies for deception and influence have largely muted Fogg’s concern.

Information systems science researchers Harri Oinas-Kukkonen and Marja Harjumaa developed a systematic framework for designing persuasive systems. In the process, they found that whether a persuasive technology is characterized as coercive or deceptive makes little difference. People’s attitudes and behaviors can be shaped, changed, or reinforced by persuasive technology whether or not deception or coercion is designed into it.

Machine reasoning researcher Timotheus Kampik argued that most researchers also continue to find deception at the root of much persuasive technology and artificial intelligence research and application. As a result, deception in persuasive technology occurs not only on purpose but also “occasionally and unintentionally”.

Deception is generally defined as creating erroneous sensemaking in someone so that they make a decision that is advantageous to the deceiver.

Kampik expanded the range of persuasive technology applications he examined. He explored knowledge-sharing websites like Stack Exchange, video-sharing platforms like YouTube, enterprise instant messaging applications like Slack, and the source code sharing site GitHub.

In another example, Kampik analyzed subscription news sites such as the Washington Post online. Headlines are written to attract potential readers, but the paywalled article behind a headline sometimes does not fully reflect it. Kampik suggested this is a form of deception in the persuasive technology of that news site and subscription service, even if it is less commonly recognized as such.

These applications all reflect persuasive technology design, in which an application is built to drive engagement from anticipated users across a range of motivations.

‘Substantially boosting’ the influence of persuasive technology on targeted users with adaptability and personalization

Health informatics and human-computer interaction researcher Shlomo Berkovsky argued that the application of personalization in persuasive technologies could “substantially boost” the impact of those technologies on someone’s behavior.

In one study he cited, personalized feedback motivating users to quit smoking increased quit rates. The more frequently the technology interacts with the user, the more persuasive it appears to be. This differs slightly from gamifying participation in an application, for example by tracking how many miles you ran or walked, which is still a form of persuasive technology.

Both campaigns appeared to be designed as a pathway of initial contact and frequent online interaction before weaponizing some file to exploit the target.

Even in a gamified environment such as a mobile application for cyclists, there is a shared narrative in the aggregated cycling data and completed routes. That narrative also runs through users’ relationships or identification with those virtual and offline communities and people. The application’s persuasive design is not only adapted and personalized to each cyclist. The cyclist is also likely influenced by what others share of their own cycling times and routes, whether those riders are on the other side of the world or in the same city.

The aerospace industry employees were engaged with a health and diet program that involved initial or regular journal entries. This demonstrates an attempt at adaptivity and personalization in persuasive technology design. The timing and platform of the personalization mattered less than the content of the communication for that intended user and who it came from. The sender was a trusted fitness influencer who had been discussing health and diet with her target in the aerospace defense industry during the pandemic.

The growing “always-connectedness” of our social media accounts and applications will continue to shape how often we are exposed to communication and content designed just for us. More generally, it will shape what we, or people like us, are looking for in applications.

In some studies Berkovsky cited, advertisements tailored to a user’s personality traits were also evaluated more positively when they mirrored the user’s motives.

We do not know whether Iranian counterintelligence considered this correlation. However, counterintelligence framing of insider and outsider threats rests on a familiar premise: if someone is willing to risk contact even with a country considered hostile, perhaps they are willing to risk more, including espionage.

Gamification researcher Juho Hamari conducted a meta-analysis of empirical studies on gamification and persuasive technology. He found that users are more responsive to these technologies when, for example, they are already interested in or trying to accomplish the targeted behavior but have found it difficult to do so through that interface.

Screenshot from the Mandiant report of one of the controlled candidate instruction portals.

Human-computer interaction researcher Maurits Kaptein characterized some of this as “implicit profiling”. In the context of Iranian cyber counterintelligence, this would be implicit profiling of the undefined pool of people who may be more likely than others to consider leaving Iran and working for Israel, or sharing their sensitive knowledge with Israel in some capacity. Understandably, Iranian counterintelligence is concerned about people like this.

This article introduced the framework of persuasive technology to show how it appears in most of the software and applications we interact with.

Rather than projecting folkloric threats of Iranian cyberespionage and counterintelligence, this article suggests an alternative. Understanding the design these Iranian campaigns may be normalizing can help us understand their approach and how we might defuse it.
